A no-code MLOps platform for programmatic data annotation

In 2022, my team and I were hired for a 16-week engagement in support of a Big Four accounting firm in New York City.

Background.

MLOps (“machine learning operations”) is a practice for collaboration and communication between data scientists and operations professionals to help manage the production machine learning (ML) or deep learning lifecycle.

Similar to the DevOps or DataOps approaches, MLOps looks to increase automation and improve the quality of production ML while also focusing on business and regulatory requirements. Although MLOps started as a set of best practices, it is slowly evolving into an independent approach to ML lifecycle management.

MLOps applies to the entire lifecycle — from integrating with model generation (software development lifecycle, continuous integration/continuous delivery), orchestration, and deployment, to health, diagnostics, governance, and business metrics.

Data Annotation.

Legal documents bind companies and people, but over time they become incredibly lengthy, wordy, and complicated. As companies sell, merge, and evolve, the language that governs them becomes increasingly complex.

Within the financial and legal sectors, data annotation is essential for tasks like document classification, sentiment analysis, fraud detection, and compliance monitoring. Firms like this client need to access, label, and process large amounts of structured (tabular) data and unstructured data (photos, video, audio) to find what is known as ground truth within the legal documents of the companies they audit and serve.

Annotation is the process of labeling data such as documents or images. It is typically done by hand in what is known as a human-in-the-loop process, which involves mundane and tedious tasks such as drawing bounding boxes around objects in images or highlighting text in documents in order to identify “ground truth.” In many cases it requires experts to mediate consensus on highly dated, sophisticated, or obscure documents or images.

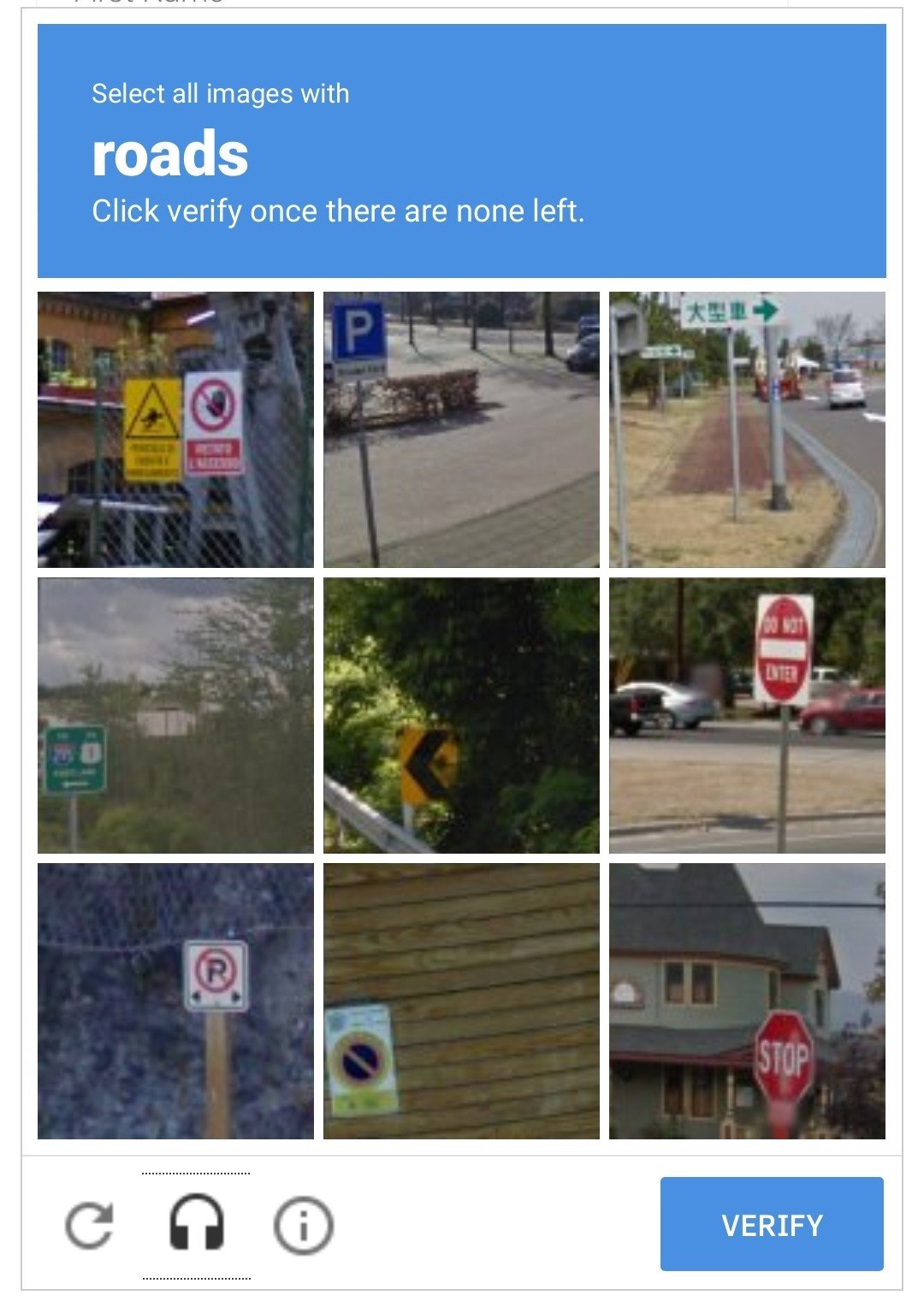

Fun Fact: Companies like Google actually use the public (i.e., you and me) to manually annotate images to improve their self-driving algorithms and artificial intelligence models via CAPTCHA, which is a test to determine whether an online user is really a human and not a bot (see image 3 in the carousel).

The Challenge.

There was a need to enable non-technical project managers to take a project from conception to delivery via no-code processes

The need for reusability and accessibility of historical knowledge within the process via advanced data engineering capabilities

The product must offer convenience and practicality to the users, demonstrating a clear and repeatable process for reducing total workload and stress to a given staff member for data exploration, labeling, and management

Elevator Pitch.

“Create a no-code platform where both technical and non-technical team members can quickly set up, build, train, tune, and deploy machine learning models for any use case with fully managed infrastructure, tools, and workflows. Faster data annotation, with immediate engagement spin-up time and results delivered in half the amount of time.”

Platform Vision.

A no- to low-code data annotation platform that enables users to annotate and label data with minimal or no coding required

A one-stop-shop for solution managers and PMs to manage annotation workloads across annotators and labelers

A place for data scientists to conduct and apply exploratory data analysis measures on their data

Enable non-technical project managers to set up and deploy a model

Enable business/domain experts to scale AI solutions independently, thus reducing time to delivery

Enable teams to design, build, and deploy scalable, secure solutions and products at a speed, cost, and degree of accuracy not achievable through manual effort alone

Unify technology & human expertise and solve complex business problems

The Team.

As the lead product strategist, I served as the primary point of contact and consultant responsible for leading a hybrid, cross-functional team of UI/UX designers, project managers, and solutions architects alongside the Client’s team of decorated PhD data scientists, partners and advisors, solution owners, engineers, and project managers.

Value Proposition.

Faster data annotation, with immediate engagement spin up time and results delivered in half the amount of time

Enable non-technical project managers to set up, deploy, monitor, and tune a model without using code

Updating and changing annotations (as they grow and there are new definitions)

Ability to easily deploy, monitor, and update new models on-demand

Standardized visualization of model confidence (scoring of individual results)

Re-usability of AI models

Visual solution pipelines (DAGs) to drag/drop components and ‘visualize’ your system

Provide clients and users with pre-baked visualizations, reporting, and standard analytics

Search, interrogate, save queries
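To make the "visual solution pipelines (DAGs)" item concrete, here is a minimal sketch of how a drag-and-drop canvas could be represented and ordered behind the scenes. The stage names and the `execution_order` helper are hypothetical and are not taken from the client's platform; the only library used is Python's standard `graphlib`.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each stage maps to the set of stages it depends on.
# In a visual builder, every box is a key and every arrow is a dependency.
pipeline = {
    "ingest": set(),
    "process": {"ingest"},
    "explore": {"process"},
    "annotate": {"process"},
    "train": {"annotate"},
    "deploy": {"train"},
    "monitor": {"deploy"},
}


def execution_order(dag):
    """Return the stages in an order that respects every dependency."""
    return list(TopologicalSorter(dag).static_order())


order = execution_order(pipeline)
print(" -> ".join(order))
```

If a user wires two components into a loop, `static_order()` raises `graphlib.CycleError`, which a UI could surface as a validation message before anything runs.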

The Market.

The global data annotation tools market size is expected to reach USD 3.4 billion by 2028 (according to a report by Grand View Research). The market is expected to expand at a CAGR of 27.1% from 2021 to 2028.

The annotation and data science services that the Client offered generated tens of millions of dollars in annual recurring revenue (ARR) for the firm and spanned multiple industries such as banking and financial services, fraud litigation, oil and energy, and healthcare.

Use Cases.

Product Use Cases in the Legal Industry

Document Classification: Law firms and legal departments can use low-code annotation platforms to classify legal documents by type (e.g., contracts, court filings, or briefs). This simplifies document management and retrieval.

Entity Recognition: Annotating legal texts for entities such as names, dates, and locations is essential for legal research and information retrieval. Low-code annotation platforms can streamline this process.

Case Outcome Prediction: Legal professionals can use these platforms to annotate historical case data, including case details, rulings, and outcomes. This annotated data can be used to build predictive models for case outcome analysis.

Mergers and Acquisitions: Tax, audit, and legal firms are hired to use OCR tools with annotation engines to consolidate, analyze, and find common truth in documents and other files regarding legalities, debts, and other subjects.

Product Use Cases in the Financial Industry

Fraud Detection: Low-code data annotation platforms can be used to label transaction data for training fraud detection models. These platforms enable rapid data labeling, helping financial institutions identify suspicious activities more efficiently.

Sentiment Analysis: Financial companies can use these tools to annotate social media data, news articles, and customer feedback for sentiment analysis. This information can be valuable for investment decisions and risk assessment.

Compliance Monitoring: Compliance documents, such as legal agreements and regulatory texts, can be annotated for specific clauses and terms. This aids in ensuring that financial institutions adhere to legal requirements.

Productization Workshop Goals.

Convert a services-style offering (time-and-materials based) into a structured, productized solution that can scale more easily

Define what capabilities and features are useful for Clients

Define what capabilities and features are useful for users

Ideate and “productize” various user capabilities into distilled features

Map technical architecture strategy and frameworks/stack for scalability, performance, and success

Map the data pipeline under a common data model

Create a product strategy and roadmap for future phases of development

Understand the context of the product ecosystem (customers, product lifecycle, sales cycle, ROI, users, challenges)

De-risk future investment decisions for stakeholders

Design Sprint Goals.

Create low-fi sketches of the various interface screens of the envisioned solution

Match the end-to-end user flows with the envisioned screen designs

Create and implement a custom visual design system and branding guideline for the product

Create a visual framework for users to follow when using the platform

Create a human-centric user interface (UI) and user experience (UX) via high-fidelity wireframes and a clickable prototype in Figma

Ensure interface and experience is intuitive, seamless, and highly usable

Features.

Consumption View

Analysis Trends and a continual ingestion feedback loop

Visualization View

See all models, where each one is in production, who is working on it, and the changes and notes they have made

Tracking and Analytics

Telemetry data, data drift, annotation performance, data science practices

KPIs, user activity, and user feedback
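As an illustration of the "data drift" item above, the sketch below computes a Population Stability Index (PSI) over the labels seen in two batches of documents. PSI is one common drift measure chosen here for illustration; it is an assumption, not necessarily what the platform tracked. The function name, the `eps` smoothing value, and the sample data are all hypothetical.

```python
import math
from collections import Counter


def psi(baseline, current, eps=1e-6):
    """Population Stability Index between two samples of categorical values."""
    categories = set(baseline) | set(current)
    base_counts, cur_counts = Counter(baseline), Counter(current)
    score = 0.0
    for cat in categories:
        # eps keeps the logarithm defined when a category is missing from one sample
        p = max(base_counts[cat] / len(baseline), eps)
        q = max(cur_counts[cat] / len(current), eps)
        score += (q - p) * math.log(q / p)
    return score


# Hypothetical label mix at training time vs. in a recent production batch
baseline_labels = ["contract"] * 70 + ["invoice"] * 30
current_labels = ["contract"] * 40 + ["invoice"] * 60

drift = psi(baseline_labels, current_labels)
print(f"PSI = {drift:.3f}")
```

A score of zero means the two distributions match. A frequently quoted rule of thumb treats values above roughly 0.25 as a significant shift, but the right alert threshold would depend on the project.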

Workshop Outputs.

Create user persona profiles for each relevant end-user type

Define goals and pain-points for each user

User journeys (maps the actions, activities, thoughts, behaviors of each user) throughout their process of using the product

Distilled product features written as Epics and user stories

Value Effort Matrix for features

MVP Product roadmap

Qualitative Research - stakeholder interviews.

We kicked off with stakeholder interviews to understand a few key details including:

Project timelines

Scope of the product

Trends in the market

Expected type and quality of deliverables

Company and leadership OKRs

Resource constraints and limitations (budgets, team, resources, etc.)

Project risks

An effective way of working and communicating together

Qualitative Research, end-user interviews.

What: 12 remote interviews with guided questionnaire style scripts

Who: 4 persona user-types, 3 interviews per persona

data scientist, project manager, data labeler, annotator

Goal: Validate assumptions and collect new insights about target users

Qualitative Research - Demos

Conducted detailed demos and analysis of existing and previous technologies, tools, and systems used in the project process and day-to-day workflows.

Demos included:

Previous prototype application

Current annotation user interface

Securities Analyzer, a patented OCR tool for data ingestion

The Problem.

The current annotation process is not clear

Users rely on data engineers for what could be drag-and-drop

The current product does not integrate repeated processes into an intuitive and automated design interface and user experience

Bespoke product deployment environments are expensive to build and difficult to maintain

ATCP, SAT, and other deployment procedures are time-consuming

The existing solution is composed of various antiquated processes and systems that lack project visibility

Established QA/QC best practices and accelerators are not in use

A need to simplify and democratize aspects of the modeling work in order to amplify the workforce

Users.

A.I. enablers - i.e., solution owners

Data scientists

Client - the tax/audit firm hired for the purpose of annotation and data labeling

Project Managers - handles the project set up, user assignment, work assignment and review, and guideline management

SMEs - legal domain experts and subject matter experts

Data Labelers - the user creating annotations and labeling data associated with snippets and document files

The Solution.

An internal and client-facing MLOps workbench and AI factory for information extraction, spanning data ingestion, data enablement, data processing, exploratory data analysis (annotation/ground-truth labeling), and data labeling through to model setup, development, deployment, and solution monitoring in production.

“Ignite will be a place where teams can collaborate, data scientists come to build, and domain experts can set up and monitor projects. Solutions will be built on top of Ignite.”

Client Deliverables.

Independent competitor research analysis

User Persona Profiles

User Journey Maps

Information architecture

Value Effort Matrix for user stories

MVP product specification

Low-fidelity wireframe sketches

Usability Testing and User Feedback sessions

Product improvements and suggestions based on UI/UX feedback sessions

Hi-fidelity clickable prototype for the entire platform

Evolved component library and style guide for the platform

Comprehensive engagement Findings Report

Product Goals.

Manage quality and consistency of AI solutions

Create a more unified interface and seamless design for the existing product

Create an integrated experience for all users; streamlined for efficiency

Create a data-focused experience to clarify business goals and map them to technical tasks/models

Productize and streamline a services driven offering for various data annotation style engagements

Make the process of reviewing document annotations smoother

Enable expanded search capabilities for viewing data

Internal Benefits.

Easily build, manage, deploy new AI solutions at scale

Improved project transparency and progress visibility for both clients and staff

Enhanced team workflow efficiency

Reduction in mundane and repetitive tasks for data annotators

Reduction in data entry and duplication for team members

Improved team and client communication, collaboration

Organizational Benefits.

“Faster annotation, with immediate engagement spin up and results delivered in half the amount of time”

Improved annotation project timeliness and shortened throughput time

Reduced annotation costs

Decreased time-to-delivery for AI solutions

Increased profit margin for AI solutions

Greater volume of AI solutions sold

Improved project data security and optimization, reducing third party risk

Project Managers

Roles & Responsibilities

Handles the project set up, user assignment, work assignment and review, and guideline management

Responsible for end-to-end project set up and execution

Assigns work load and manages day-to-day team operations

Sometimes annotates and creates guidelines

Handles up to 15 types of solutions that follow the same pattern

PM User Stories

Assign annotation tasks at the field level so that I can guide my team

Create, title, and set up projects quickly and accurately so that I save time and effort on activity

Provision the client SFTP directly from this platform

Set reminders for outstanding tasks

Set annotation guidelines/guardrails in defining field extractions to ensure quality results

Configure annotation quality metrics like inter-annotator agreement thresholds and QC manual review thresholds

Configure the annotation setup to only allow annotations and labels that align with pre-configured rules and heuristics
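The inter-annotator agreement threshold mentioned in the stories above can be sketched with Cohen's kappa, a standard agreement statistic for two annotators. Which metric the platform actually used is not stated, so treat this as an assumption; the `AGREEMENT_THRESHOLD` value, the function names, and the sample labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Share of items where the two annotators chose the same label
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


AGREEMENT_THRESHOLD = 0.6  # hypothetical value a project manager might configure


def needs_manual_review(labels_a, labels_b, threshold=AGREEMENT_THRESHOLD):
    """Flag a batch for QC review when agreement falls below the configured threshold."""
    return cohens_kappa(labels_a, labels_b) < threshold


annotator_1 = ["party", "date", "party", "amount", "date", "party"]
annotator_2 = ["party", "date", "amount", "amount", "date", "party"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}, review needed: {needs_manual_review(annotator_1, annotator_2)}")
```

Kappa corrects raw agreement for the agreement expected by chance, which is why it is often preferred over a plain percentage when some labels are much more common than others.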

Data Labelers

Roles & Responsibilities

Responsible for annotating documents according to guidelines, searching for fields, and extracting language

i.e., performing manual data extraction and labeling of words, phrases, and paragraphs within invoices, legal documents, and records

User Stories

View an Arbitration queue so that the conflict can be cleared, guidelines updated, and a correct annotation agreed upon

Know which documents and fields I need to annotate

I want to annotate directly on a PDF file so that I can best incorporate context and document structure into my annotation decisions.

Have an efficient work process so that I can be more productive and help deliver solutions faster

I want to have guidelines and annotation requirements documented and implemented so that annotations are consistent and accurate with SME interpretations and aligned with the model task/goal

I want to get quick feedback on questions about how/what to annotate or how to interpret text so that I am not blocked during annotation
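The arbitration queue described in the stories above could be populated along these lines: group annotations by document and field, then queue every group whose annotators disagree. This is a minimal sketch under assumed names; the `Annotation` record, its fields, and the case-insensitive comparison are hypothetical choices, not the platform's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    document_id: str
    field: str
    annotator: str
    value: str


def build_arbitration_queue(annotations):
    """Return the (document, field) groups where annotators extracted different values."""
    grouped = defaultdict(list)
    for ann in annotations:
        grouped[(ann.document_id, ann.field)].append(ann)

    queue = []
    for key, group in grouped.items():
        # Compare normalized values so whitespace and casing alone do not count as conflicts
        distinct = {ann.value.strip().lower() for ann in group}
        if len(distinct) > 1:
            queue.append((key, group))
    return queue


annotations = [
    Annotation("doc-1", "effective_date", "alice", "2021-03-01"),
    Annotation("doc-1", "effective_date", "bob", "2021-03-10"),
    Annotation("doc-1", "governing_law", "alice", "New York"),
    Annotation("doc-1", "governing_law", "bob", "new york "),
]

for (doc, field), group in build_arbitration_queue(annotations):
    print(doc, field, [(a.annotator, a.value) for a in group])
```

Each queued item keeps the original annotations, so an arbitrator can see who extracted what before agreeing on a correct value and updating the guidelines.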

Client

Roles & Responsibilities

Review model performance and drift so that I can hold my solutions team accountable to the cost I paid for the solution

Advise on, review, and override answers via a human-in-the-loop process

User Stories

I want to easily upload my documents to the platform

I want to programmatically connect to platform and schedule batches of annotations to be performed

I want to view original source documents so that I can trace answers back to their source

I want to measure the ROI of my project so that I can build a case for future sales engagements

I want to get answers to the questions that interest me most about my documents so that I can focus my efforts

I want to know what is required of me so that I know what level of involvement I need to have

I want to monitor the high-level progress and understand key milestones so that I have visibility into the project I paid millions for

I want to have an overview of project execution so that I can see where we are at and monitor the execution

I want to receive a summary of the regulatory points of interest within my documents so that I can understand my risks

I want to have visibility into the progress of my project so that I can keep my management team up to date and comfortable with status

Conclusion

Incorporating machine learning (ML) into a production environment extends beyond merely deploying a model as an API for predictions. It involves implementing an ML pipeline capable of automating the retraining and deployment of new models. Establishing a Continuous Integration/Continuous Delivery (CI/CD) system allows for the automated testing and deployment of new pipeline implementations. This system provides the flexibility to adapt to swift changes in data and the business environment. It's not necessary to transition all processes from one level to another immediately; instead, gradual implementation of these practices can enhance the automation of ML system development and production.
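The retrain-and-deploy loop described above can be reduced to a small sketch: train a candidate, evaluate it, and promote it only if it beats the model currently in production. The function name, the `min_gain` margin, and the stubbed training and evaluation steps are hypothetical; a real pipeline would plug in its own training job and evaluation set.

```python
def run_retraining_pipeline(train, evaluate, production_score, min_gain=0.01):
    """Train a candidate model and decide whether it should replace production.

    `train` returns a candidate model and `evaluate` returns its score,
    where a higher score is better.
    """
    candidate = train()
    score = evaluate(candidate)
    # Require a margin so that evaluation noise alone does not trigger a deployment
    promote = score >= production_score + min_gain
    return {"candidate": candidate, "score": score, "promote": promote}


# Stubbed steps standing in for a real training job and evaluation run
result = run_retraining_pipeline(
    train=lambda: "model-v2",
    evaluate=lambda model: 0.84,
    production_score=0.82,
)
print(result)
```

Running a gate like this on a schedule, or whenever monitoring reports drift, is one gradual step toward the automation described here without moving every process at once.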